13/12/25

Reddit: Gmail Write Access Issues

Start time: 06:54AM AEST

URL: https://www.reddit.com/r/ChatGPTPro/comments/1pknpwa/gmail_write_access_when_using_developer_mode/

Background

The user indicated that a workaround they had previously used for MCP write actions was affected. Earlier versions blurred two concepts:

Developer Mode (dev mode), which was intended for tool/schema experimentation.

OAuth capability scoping, which is security-critical.

The changes as of today:

Connector permissions are now:

enforced strictly at the OAuth + backend policy layer

independent of dev mode

This prioritises user safety and scope integrity over developer convenience.

Dev mode now reduces automatic connector invocation rather than enhancing it.

Hence the reported symptom: “works when dev mode is off, fails when it is on.”
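The separation described above can be illustrated with a minimal sketch. Everything here is hypothetical: `ConnectorRequest`, `authorize`, the scope strings and the `automatic` flag are illustrative names, not any vendor's actual API. It shows the ordering that produces the symptom: granted OAuth scopes alone decide what an action may do, and dev mode can only remove automatic invocation, never add capability.

```python
from dataclasses import dataclass

# Hypothetical action -> required-scope mapping (illustrative names only).
REQUIRED_SCOPE = {
    "read": "gmail.read",
    "draft": "gmail.draft",
    "send": "gmail.send",
}


@dataclass(frozen=True)
class ConnectorRequest:
    action: str                # "read", "draft" or "send"
    granted_scopes: frozenset  # scopes actually granted through OAuth consent
    dev_mode: bool             # client-side Developer Mode toggle
    automatic: bool            # True if the model chose to invoke the connector itself


def authorize(req: ConnectorRequest) -> bool:
    """Backend policy decision for a single connector call."""
    needed = REQUIRED_SCOPE.get(req.action)
    # 1. Scope check first, and it never looks at dev_mode:
    #    no client toggle can grant a scope the OAuth token lacks.
    if needed is None or needed not in req.granted_scopes:
        return False
    # 2. Dev mode can only narrow behaviour: it suppresses automatic invocation.
    if req.dev_mode and req.automatic:
        return False
    return True


if __name__ == "__main__":
    read_only = frozenset({"gmail.read"})
    print(authorize(ConnectorRequest("read", read_only, dev_mode=False, automatic=True)))   # True
    print(authorize(ConnectorRequest("read", read_only, dev_mode=True, automatic=True)))    # False
    print(authorize(ConnectorRequest("draft", read_only, dev_mode=True, automatic=False)))  # False
```

The second call mirrors the reported symptom: same scopes and same request, but with dev mode on the automatic connector call is suppressed. The third shows that dev mode cannot unlock a write (draft) action the token was never granted.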

Earlier versions exposed fine-grained Gmail actions (read/write/batch) when Developer Mode was on. That looked like extra control, but in practice it blurred the line between tool development and OAuth permission enforcement.

Gmail access is enforced strictly at the OAuth / backend policy layer.

Developer Mode no longer expands or overrides connector capabilities.

In fact, dev mode may suppress automatic connector use, which is why Gmail queries fail there but work when dev mode is off.

This hardening repositions dev mode as a space for reasoning about tools rather than acting freely with them.

We have also seen many users conflating the status (outage) page with capability changes. Status pages almost never disclose governance or security-model adjustments unless forced to.

Incident: Reactive and focused on resolving disruptions and restoring services. Deals with availability.

Change: Modification of function/behaviour. Deals with execution and behaviour capabilities.

The actual problem

The user wanted write access, even if only for drafts. A common issue we see when people architect solutions is not security itself but a failure to consider the other assets within the flow.

The issue was related to Gmail; here are some commonly overlooked items:

Gmail's rollback window (Undo Send) is only 5 to 30 seconds.

Lack of audit trails compared with MS Word or other tools that require a Python stand-up and leave an identifier.

API calls can be costly, especially if they are incorrectly configured and multiple retries take place.

Misattribution and hallucination risks can lead to the “AI made me write this” plea. In some cases it is valid, but given the lack of regulation it blurs who is responsible, and most of the time the assumption falls on the vendor.

Earlier versions hallucinated full email chains.
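On the cost point, a common safeguard is to cap retries and back off exponentially, so a misconfigured call cannot loop indefinitely against a paid API. A minimal sketch, assuming a hypothetical `call` function that raises a hypothetical `TransientError` on retryable failures; the attempt cap and delays are placeholder values, not provider recommendations.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, rate limits, 5xx)."""


def call_with_budget(
    call: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying transient failures with capped exponential backoff.

    Worst-case spend is bounded: at most `max_attempts` billable calls.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts:
                raise  # budget exhausted: surface the error instead of looping
            delay = base_delay * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out retries
    raise RuntimeError("unreachable")
```

The injectable `sleep` parameter keeps the function testable without real waits; the key design choice is that exhausting the budget raises rather than retrying silently.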

The AI thinks, the system reads emails under rules, and the user stays in charge.

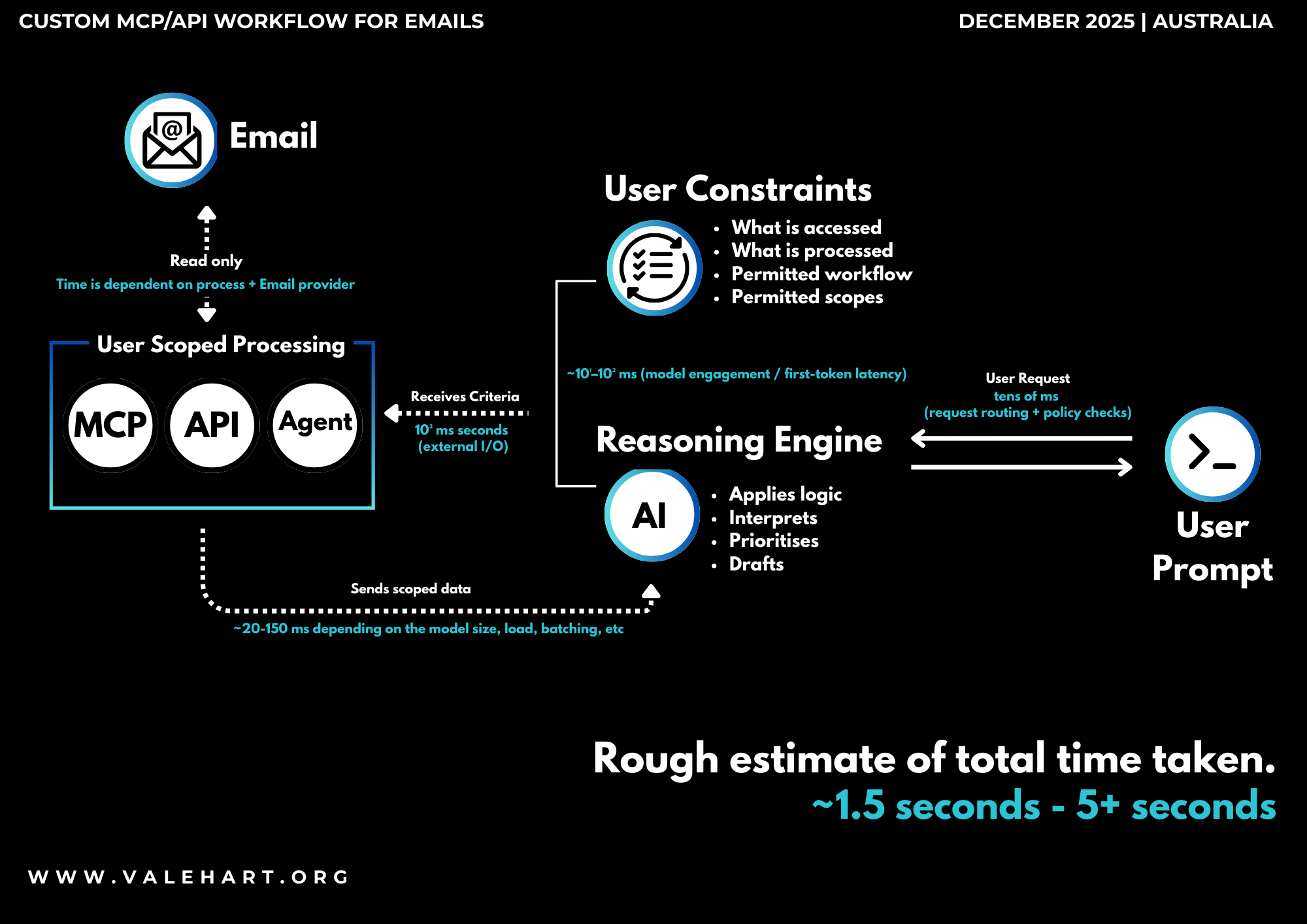

Workflow

End-to-end latency is usually sub-second without tools, and a few seconds with external systems, with most delay coming from external I/O rather than model reasoning.

| Step | Component | Description |
|---|---|---|
| 1 | User Prompt | The user submits a prompt expressing intent (e.g. “check my email”). The prompt may be underspecified and does not directly trigger actions. |
| 2 | Request Routing | The request is routed through system-level routing, policy, and safety checks before reaching the reasoning engine (tens of milliseconds). |
| 3 | Reasoning Engine | The reasoning engine interprets the request and determines what information, clarification, or constraints are required to proceed. |
| 4 | User Constraints | User-defined constraints are applied, bounding what data may be accessed, processed, and returned (permitted workflows and scopes). |
| 5 | Scoped Processing | If permitted, user-scoped processing components (MCP, API, Agent) access external systems in a read-only manner to retrieve scoped data. |
| 6 | External Systems | External systems (e.g. email providers) respond based on provider-specific latency and availability. No write actions are performed. |
| 7 | Data Return | Filtered and scoped data is returned to the reasoning engine for analysis and interpretation. |
| 8 | Output Generation | The reasoning engine applies logic to interpret, prioritise, and draft outputs for the user. |
| 9 | User Review | The user reviews the output and retains full control over any subsequent actions. |
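The workflow can be read as a pipeline in which the only path to external systems is a read-only, user-scoped fetch, and the output is a draft that waits for the user. A minimal sketch of steps 3 to 9; `Constraints`, `Draft`, `infer_source`, `fetch_readonly` and the inbox dictionaries are hypothetical stand-ins, not a real connector interface, and step 2 routing is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Constraints:
    """Step 4: user-defined bounds on what may be accessed and returned."""
    allowed_sources: set = field(default_factory=lambda: {"email"})
    max_items: int = 5


@dataclass
class Draft:
    """Step 8 output: a suggestion only. Nothing is sent; step 9 stays with the user."""
    summary: str
    items: list
    requires_user_approval: bool = True


def infer_source(prompt: str) -> str:
    """Step 3 (toy): map an underspecified prompt to the data source it needs."""
    return "email" if "email" in prompt.lower() else "unknown"


def fetch_readonly(inbox: list, limit: int) -> list:
    """Steps 5-6: read-only retrieval; returns copies so the source is never mutated."""
    return [dict(msg) for msg in inbox[:limit]]


def handle_prompt(prompt: str, inbox: list, constraints: Constraints) -> Draft:
    source = infer_source(prompt)
    if source not in constraints.allowed_sources:
        # Step 4 bounds the request before any external system is touched.
        return Draft(summary=f"'{source}' is outside your permitted scopes.", items=[])
    items = fetch_readonly(inbox, constraints.max_items)  # steps 5-7
    unread = [m for m in items if not m.get("read", False)]
    # Step 8: interpret and prioritise into a draft for the user to review.
    return Draft(summary=f"{len(unread)} unread of {len(items)} checked.", items=unread)
```

No function in the sketch has a write or send path, which is the property the table describes: the user, not the pipeline, takes any subsequent action.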

Start time: 03:47PM AEST

I do not understand this place. I posted an article on the above and was introduced to some odd terminology. Is this a Facebook for professionals? Unsure.

Looking forward to the next team taking over for updates so I will not need to venture to that website again.

Is this an advert? Is this rage bait? My face has contorted in ways I did not know were possible.

Questions that first came to my mind:

What is the causal pathway? Accidents rising compared to what??? What is the baseline comparison? Why is a sunscreen manufacturer promoting this? Did THEY have something to do with the accidents?? Do they sponsor an airbag or something? What links the correlation to causation?????

Security

Behavioural Continuity Security Model/Adaptive Behavioural Continuity Verification

Start time: 07:58PM AEST

Zero trust was the buzzword before AI. Most people know what it is but don’t understand it, leaving vendors to guide them, and most deployments keep the defaults, a weakness APTs routinely look for. As typically deployed, it fails at runtime: it authenticates entry, not continuity. Once inside, attackers exploit defaults, timing gaps, and human over-reliance on labels meant to flag irregular behaviour (for example, impossible travel, which is easily bypassed with various inexpensive or free measures). Most infiltration strategies need only a short span of time to establish layers of access, blend into normal operations, and exfiltrate slowly under detection thresholds.

However, publicly available LLMs (ChatGPT, Gemini, etc.) build implicit behavioural models of the user within 2 to 5 turns and adjust them within the thread’s context. This has been tested and found to be language- and domain-agnostic. The models pick up on:
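To show why impossible-travel rules are easy to sidestep, here is the usual form of the check: take two sign-ins, compute the great-circle (haversine) distance between their geolocated positions, and flag them if the implied speed exceeds what a traveller could manage. A minimal sketch; the 900 km/h ceiling and the `SignIn` type are illustrative assumptions. Because the rule only sees the geolocated IP, an attacker exiting through a VPN or proxy near the victim’s usual location produces a plausible speed and passes.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass(frozen=True)
class SignIn:
    lat: float        # degrees, from IP geolocation
    lon: float        # degrees
    timestamp: float  # seconds since epoch


def haversine_km(a: SignIn, b: SignIn) -> float:
    """Great-circle distance between two sign-in locations."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def is_impossible_travel(a: SignIn, b: SignIn, max_kmh: float = 900.0) -> bool:
    """Flag when the implied speed between two sign-ins exceeds max_kmh."""
    hours = abs(b.timestamp - a.timestamp) / 3600
    distance = haversine_km(a, b)
    if hours == 0:
        return distance > 0  # simultaneous sign-ins from two places
    return distance / hours > max_kmh
```

The check authenticates a location label, not the behaviour behind it, which is the continuity gap the paragraph describes.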

rhythm

shorthand

correction style

greeting entropy

compression patterns

reaction to ambiguity
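The models do not expose how they weigh these signals. As an illustration only, here is one way to approximate a few of them as numeric features from a user’s messages. Every proxy is an assumption: rhythm as the mean and spread of message length, shorthand as the share of tokens from a small hypothetical abbreviation list, greeting entropy as Shannon entropy over each message’s opening word, and compression as zlib’s compressed-to-raw size ratio. Correction style and reaction to ambiguity are not modelled here.

```python
import math
import statistics
import zlib
from collections import Counter

# Hypothetical shorthand list; a real profile would be learned per user.
SHORTHAND = {"pls", "thx", "btw", "imo", "u", "ur", "idk", "tbh"}


def shannon_entropy(items: list) -> float:
    """Entropy in bits of the empirical distribution of `items`."""
    counts = Counter(items)
    total = len(items)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def _norm(token: str) -> str:
    return token.lower().strip(".,!?")


def behavioural_features(messages: list) -> dict:
    """Crude numeric proxies for rhythm, shorthand, greeting entropy and compression."""
    if not messages:
        raise ValueError("need at least one message")
    lengths = [len(m.split()) for m in messages]
    tokens = [_norm(t) for m in messages for t in m.split()]
    openers = [_norm(m.split()[0]) for m in messages if m.split()]
    raw = " ".join(messages).encode("utf-8")
    return {
        "rhythm_mean": statistics.mean(lengths),     # words per message
        "rhythm_stdev": statistics.pstdev(lengths),  # how regular that length is
        "shorthand_rate": sum(t in SHORTHAND for t in tokens) / max(len(tokens), 1),
        "greeting_entropy": shannon_entropy(openers) if openers else 0.0,
        "compression_ratio": len(zlib.compress(raw)) / max(len(raw), 1),
    }
```

A user who always opens with the same word scores a greeting entropy of 0 bits; one who alternates evenly between two openers scores 1 bit.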

Using this, we have developed a shape-based continuity check in which the repeated pattern does not need to be stored in memory or written into Custom Instructions. This is an authentication substrate available on nearly all public models. The shape is maintained through recurring patterns and acts as a trigger. It is not continuously invoked or stored in memory; rather, it is a latent invariant that surfaces only when the user’s behavioural drift exceeds a threshold.
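The threshold idea can be sketched as a comparison between a baseline of per-turn feature vectors and the latest turn, surfacing a check only when drift crosses a limit. A minimal sketch, assuming each turn is already summarised as a dictionary of numeric features; the root-mean-square z-score and the threshold of 3.0 are placeholder choices, not the models’ internal mechanism.

```python
import math
import statistics


def drift_score(baseline: list, current: dict) -> float:
    """Root-mean-square z-score of `current` against a baseline of feature dicts."""
    if not current:
        raise ValueError("need at least one feature")
    zs = []
    for key, value in current.items():
        history = [turn[key] for turn in baseline]
        mu = statistics.mean(history)
        # Fall back to unit scale when a feature never varied in the baseline.
        sigma = statistics.pstdev(history) or 1.0
        zs.append((value - mu) / sigma)
    return math.sqrt(sum(z * z for z in zs) / len(zs))


def should_challenge(baseline: list, current: dict, threshold: float = 3.0) -> bool:
    """Stay latent while behaviour matches; surface a check only past the threshold."""
    return drift_score(baseline, current) > threshold
```

Nothing is stored beyond the baseline itself, and the check stays silent while behaviour stays within the expected spread, matching the “latent invariant” description.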

Security questions are typically:

contextual

opportunistic

drawn from a shared substrate of communication

Another key criterion is that neither side knows the question in advance, because it emerges from dynamic communication patterns. This mitigates replay and scripting attacks. The user becomes a multifactor authentication that changes; more precisely, their behaviour does.

Building on this, the AI responds with misdirection rather than a full lockout, allowing markers and audit trails to be left depending on the request. The misdirection trail can then be used to triangulate the infiltrator and build an audit trail of their activity.

How does the AI work in this? Let’s start with some important distinctions to prevent misinterpretation. The AI’s role does NOT involve:
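A sketch of the challenge side, under stated assumptions: `shared_context` is a hypothetical list of (question, expected answer) pairs drawn from earlier turns of the same thread, and selection uses Python’s `secrets` module so the pick cannot be predicted in advance. Each challenge is accepted at most once, so a captured answer cannot be replayed.

```python
import secrets
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class ChallengePool:
    """One-time challenges drawn from shared conversation context."""
    shared_context: List[Tuple[str, str]]  # hypothetical (question, expected_answer) pairs
    used: Set[int] = field(default_factory=set)
    pending: Set[int] = field(default_factory=set)

    def next_challenge(self) -> Optional[Tuple[int, str]]:
        """Pick an unused challenge unpredictably; None once the pool is exhausted."""
        remaining = [i for i in range(len(self.shared_context)) if i not in self.used]
        if not remaining:
            return None
        idx = secrets.choice(remaining)  # CSPRNG: the pick cannot be predicted
        self.used.add(idx)
        self.pending.add(idx)
        return idx, self.shared_context[idx][0]

    def verify(self, idx: int, answer: str) -> bool:
        """Accept each issued challenge at most once, so a captured answer cannot be replayed."""
        if idx not in self.pending:
            return False  # never issued, or already answered
        self.pending.discard(idx)
        expected = self.shared_context[idx][1]
        return answer.strip().lower() == expected.strip().lower()
```

The exact-match comparison is deliberately simple; a behavioural system would compare answers more loosely, but the one-time consumption is what defeats replay and scripting.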
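One way to make misdirection traceable is the general honeytoken (canary) technique: embed a unique marker in each decoy response and record which session received it; if the marker later appears in a real system’s logs, it points back to that session. A minimal sketch; `CanaryLedger`, the `CANARY-` prefix, the decoy wording and the log-line format are hypothetical, and this is not a description of how any particular model behaves.

```python
import re
import secrets
import time
from dataclasses import dataclass, field


@dataclass
class CanaryLedger:
    """Issues unique decoy markers and maps any later sighting back to its session."""
    issued: dict = field(default_factory=dict)  # token -> (session_id, issued_at)

    def issue(self, session_id: str) -> str:
        token = f"CANARY-{secrets.token_hex(8)}"  # 16 lowercase hex chars
        self.issued[token] = (session_id, time.time())
        return token

    def decoy(self, session_id: str) -> str:
        """A plausible but inert response carrying a traceable marker."""
        return f"Draft saved. Reference: {self.issue(session_id)}"

    def trace(self, log_lines: list) -> list:
        """Return (session_id, log_line) for every line containing an issued token."""
        hits = []
        for line in log_lines:
            for token in re.findall(r"CANARY-[0-9a-f]{16}", line):
                if token in self.issued:
                    hits.append((self.issued[token][0], line))
        return hits
```

Unknown markers are ignored, so forged or guessed tokens do not pollute the trail.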

identity recognition

memory persistence

cross-account tracking

holding secret knowledge

The AI is a behaviour-driven orchestration layer that uses an LLM’s pattern sensitivity to route interactions toward high-audit, low-risk endpoints when behavioural continuity breaks.

LLMs do not see IP addresses or directly access connector-driven appliances. Instead, the AI nudges an actor toward actions that the user’s real systems would log, creating a trail to follow. In this setup the AI functions as a:

sensor for pattern deviation

router of which path the interaction takes

deception assistant to create plausible but inert paths.
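The router role can be sketched as a small decision function: below the drift threshold the request proceeds normally; above it, the request is steered to an instrumented path rather than refused. A minimal sketch; the thresholds, the `Route` values and the sensitive-action rule are hypothetical placeholders, and the drift score is assumed to come from a separate continuity check.

```python
from enum import Enum


class Route(Enum):
    NORMAL = "normal"        # behaviour consistent with the user's baseline
    CHALLENGE = "challenge"  # mild drift: ask a contextual question
    DECOY = "decoy"          # strong drift: plausible but inert, high-audit path


def route_request(drift: float, action_is_sensitive: bool,
                  challenge_at: float = 2.0, decoy_at: float = 4.0) -> Route:
    """Choose a path from a drift score; never hard-lock, prefer audited paths."""
    if drift >= decoy_at:
        return Route.DECOY
    # Sensitive actions (e.g. anything touching mail) get challenged at half the drift.
    if drift >= challenge_at or (action_is_sensitive and drift >= challenge_at / 2):
        return Route.CHALLENGE
    return Route.NORMAL
```

There is deliberately no “block” route: the strongest response is the decoy path, which keeps the actor engaged while their actions are logged.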

On this basis, the human becomes the forensic amplifier: they understand their own controls and environment, even if a thread is deleted.

Research categories for future work:

Interaction Geometry

Behavioural pattern anchoring

High-confidence interaction pattern

Statistical attractor state

Behavioural archetype inference

Pattern re-instantiation

Language-agnostic behavioural inference leading to unintended identity, social, or relational modelling