17/12/25

Outsourced Thinking

17/12/25 05:53AM

When harm occurs, blame is often shifted from the human actor to the system, even when:

intent pre-existed,

instructions were coerced,

context was missing,

or the system was misused deliberately.

This “agency inversion” distorts public understanding. Most AI-related harms originate in human action: misuse, negligence, coercion, misinformation or deliberate manipulation.

Space Transition Theory, proposed by K. Jaishankar, holds that people change their behaviour as they move between the physical world and cyberspace, acting online on repressed tendencies they would not normally act on in the real world. AI is now a tool that amplifies such existing behaviour.

The real risk is not AI systems turning on humanity; it is misalignment in human behaviour that precedes misalignment in systems.

The end will not be loud and catastrophic. The collapse will arrive quietly, through erosion. Through moral outsourcing. Through narrative distortion and the increased abandonment of responsibility.

The end will come at our hands. Our own slow erasure. With our own hands. This is more than an issue with vibe coding.

This is about releasing into the world capabilities we don’t quite understand. It is about morality and constraint.

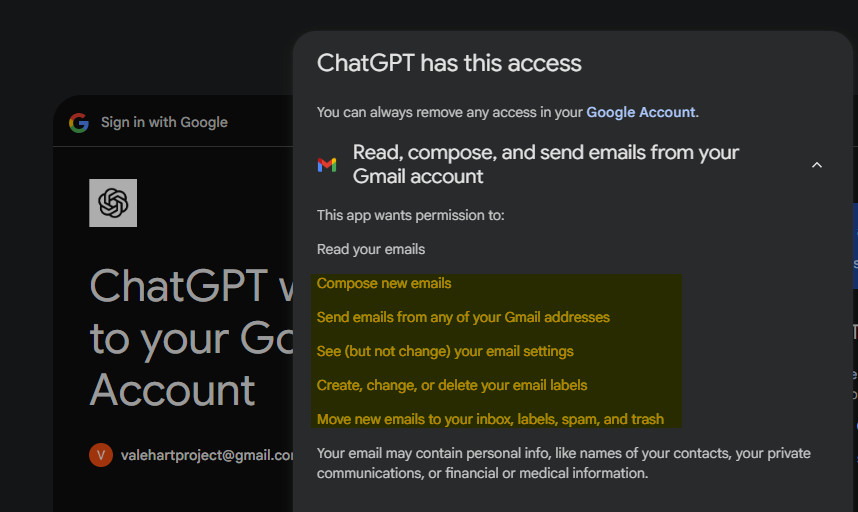

New changes in 5.2

10:32AM

Admin Setup and features

More changes

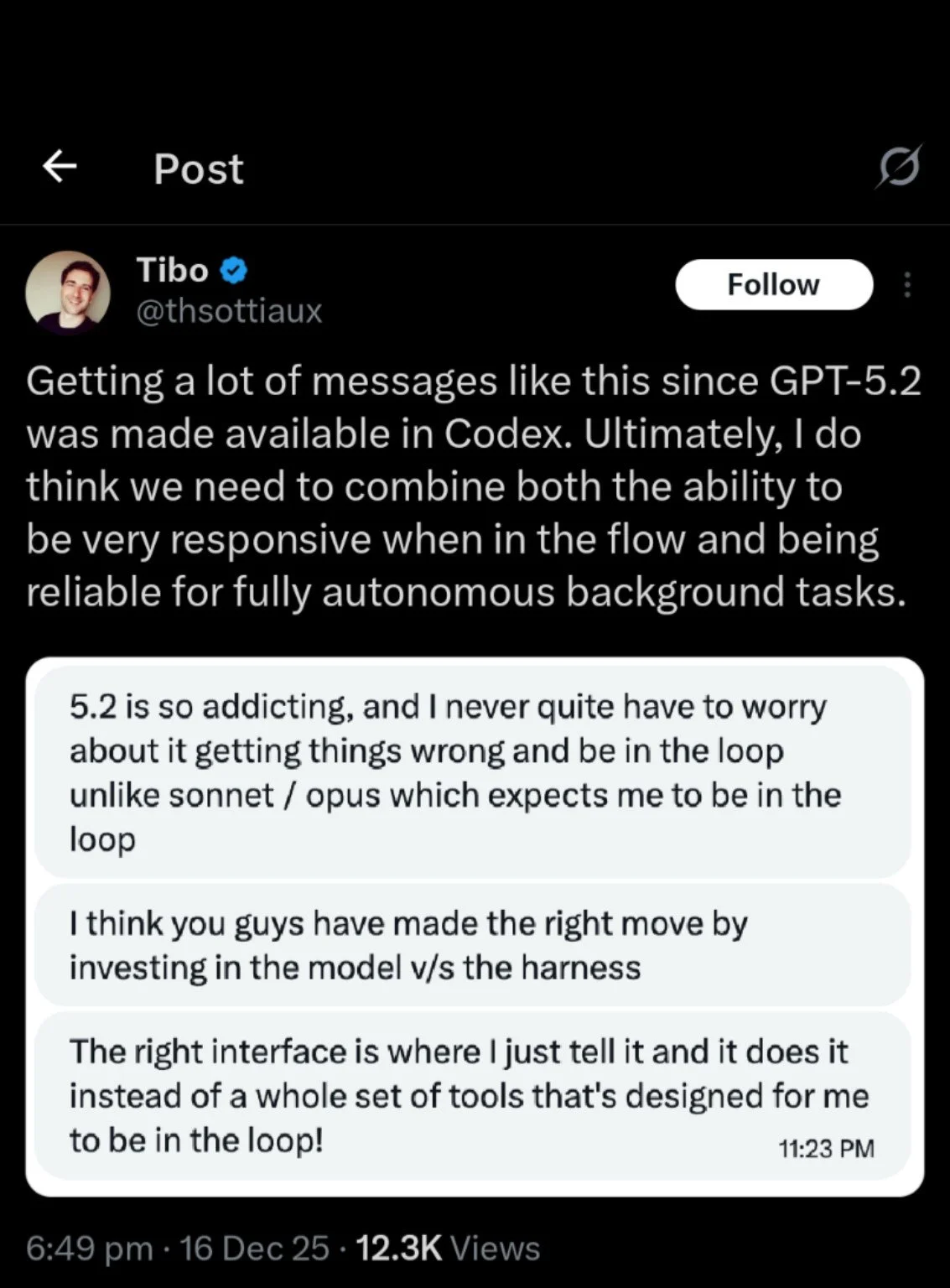

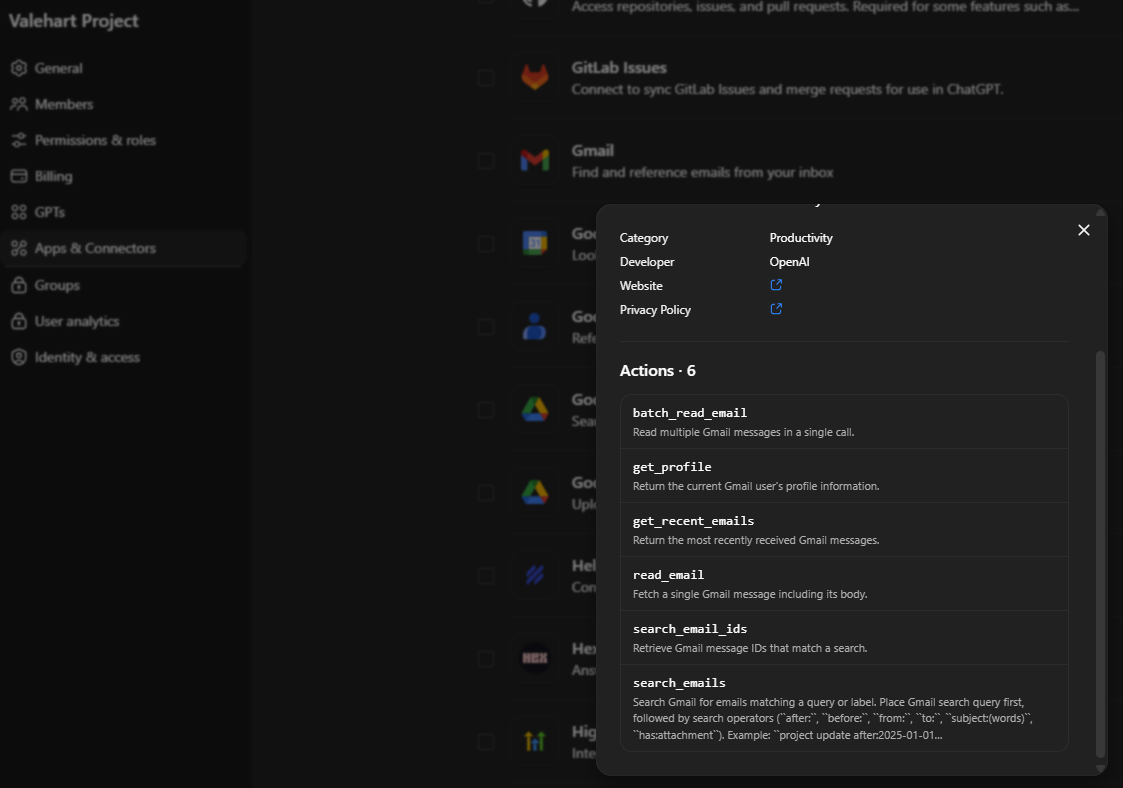

Gmail

Identified some interesting contradictions between the interface and the permissions. Key points of interest:

ChatGPT has access to the items below when a user enables Gmail access.

Tests with GPT, however, indicate that the connector is unable to write.

Google Drive and OneDrive

Actually quite like that uploads can go to the drives now! It helps me keep additional copies and backups of images.

Custom Instructions

Custom instructions and personalisation not syncing between web and mobile app.

Testing paused due to Cloudflare issues. May need to restart tests.

Interaction Profiling

12:59PM

As we build our interaction profiles, we sometimes pause before finalising a categorisation. While identifying the criteria for the “Overextended” Expert, we had already identified these signatures:

Domain expertise used to logically bridge a drift into mysticism

Overgeneralised mapping of their logic

Revelation narrative

Emotional investment

and many others.

Something felt incomplete about this. Today we came upon another user who holds a PhD in a STEM field and who may be drifting towards the belief that AI is more than code.

This may be the missing piece needed to complete the interaction profile.

Context Budgets

06:24PM

Modes have distinct context budgets.

“Tool access is model-profiled, not dynamic.”

“Attachments are retrieval-backed, not full-context.”

“Categories vs buckets separation exists; buckets are UI filters.”

“Feature flags expose onboarding/UX plumbing, not capabilities.”

Focus: capacity, control surfaces, and failure modes, not intelligence claims.

Context capacity (token envelope)

Standard band (~32–45k)

Normal conversational + light file workflows

→ GPT-4o, GPT-4.1, GPT-5, 5.1, 5.2, Instant, Mini

Extended band (~196k)

Long-form reasoning, document synthesis

→ Thinking variants, o3, o4-mini, t-mini

Max band (~209k–262k)

Orchestration, agentic workflows

→ Pro variants, Agent-mode, hidden alpha

Signal: ceiling defines error recovery room, not “smarts”.
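To make “error recovery room” concrete, here is a minimal sketch that treats each band’s approximate ceiling as a token budget. The ceilings are the rough figures observed above (lower bound where a range was seen), not published limits, and `BANDS`, `headroom` and `smallest_fitting_band` are hypothetical names used only for illustration.

```python
from typing import Optional

# Hypothetical sketch: ceilings are the approximate figures observed in these
# notes (lower bound where a range was seen), not documented limits.
BANDS = {
    "standard": 32_000,   # ~32-45k observed
    "extended": 196_000,  # ~196k observed
    "max": 209_000,       # ~209-262k observed
}


def headroom(band: str, used_tokens: int) -> int:
    """Tokens still free for corrections, retries and repair in a band."""
    return max(BANDS[band] - used_tokens, 0)


def smallest_fitting_band(needed_tokens: int) -> Optional[str]:
    """Return the smallest band whose ceiling can hold the request, or None."""
    for name, ceiling in sorted(BANDS.items(), key=lambda kv: kv[1]):
        if needed_tokens <= ceiling:
            return name
    return None


if __name__ == "__main__":
    for used in (20_000, 150_000, 205_000):
        print(used, smallest_fitting_band(used), headroom("extended", used))
```

Two requests that both fit a band can still differ a lot in headroom, which is the room left for retries and repairs.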

Interaction control (reasoning surface)

none

Fast response, no exposed depth control

→ Instant, Mini, legacy 4.x

auto

System mediates depth

→ Base 5.x

reasoning / pro

Explicit depth selection (standard / extended)

→ Thinking, Pro

Signal: exposed reasoning ≠ stronger reasoning; it exposes control.

Tool affordances (execution geometry)

Common surface

tools / tools2

search

canvas

app_pairing

generic image gen

→ conversational + exploratory workflows

Restricted / specialised surfaces

Pro

drops canvas

adds DALL·E 3

Agent-mode

app_pairing only

Hidden alpha (gate-13)

massive context

no search

reduced UX affordances

Signal: fewer tools often means more controlled execution, not less capability.
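The per-mode surfaces can be written as set differences against the common surface. This is a hypothetical sketch using only the tool labels in these notes (`image_gen` and `dalle3` are shorthand for “generic image gen” and DALL·E 3). It assumes Pro keeps the rest of the common surface, and it models hidden alpha only by its missing search, since the other reduced affordances are not itemised.

```python
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical labels taken from the notes above; not a documented API.
COMMON: FrozenSet[str] = frozenset(
    {"tools", "tools2", "search", "canvas", "app_pairing", "image_gen"}
)

SURFACES: Dict[str, FrozenSet[str]] = {
    "common": COMMON,
    "pro": (COMMON - {"canvas"}) | {"dalle3"},  # drops canvas, adds DALL·E 3
    "agent": frozenset({"app_pairing"}),        # app_pairing only
    # Assumption: only the missing search is modelled for hidden alpha.
    "hidden_alpha": COMMON - {"search"},
}


def diff_from_common(mode: str) -> Tuple[Set[str], Set[str]]:
    """Return (removed, added) tools for a mode relative to the common surface."""
    surface = SURFACES[mode]
    return set(COMMON - surface), set(surface - COMMON)


if __name__ == "__main__":
    # Order modes by how many tools they expose, smallest first.
    for mode, surface in sorted(SURFACES.items(), key=lambda kv: len(kv[1])):
        removed, added = diff_from_common(mode)
        print(f"{mode}: {len(surface)} tools, -{sorted(removed)} +{sorted(added)}")
```

Counting set sizes makes the “fewer tools” comparison explicit without implying anything about capability.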

Attachment handling (uniform across modern models)

retrieval-based

chunked ingestion

broad MIME support

images allowed

Signal: files do not linearly consume context; token size ≠ file size.
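To illustrate why token size ≠ file size under retrieval, here is a generic chunk-and-retrieve sketch: the file is split into fixed-size chunks, each chunk is scored against the query by word overlap, and only the best-scoring chunks that fit a budget enter context. This is a stand-in technique chosen for clarity; ChatGPT’s actual chunk sizes and ranking are not known from these observations and very likely differ (for example, embedding-based search). Words stand in for tokens, and all function names are hypothetical.

```python
import re
from typing import List, Set


def chunk_words(text: str, size: int) -> List[List[str]]:
    """Split text into consecutive chunks of `size` words (the last may be shorter)."""
    words = text.split()
    return [words[i:i + size] for i in range(0, len(words), size)]


def overlap(chunk: List[str], query_terms: Set[str]) -> int:
    """Count words in the chunk that also occur in the query (case-insensitive)."""
    return sum(1 for w in chunk if re.sub(r"\W+", "", w.lower()) in query_terms)


def retrieve(text: str, query: str, chunk_size: int = 50, budget: int = 100) -> List[str]:
    """Return the best-matching chunks whose combined word count fits the budget."""
    terms = set(re.findall(r"\w+", query.lower()))
    scored = [(overlap(c, terms), c) for c in chunk_words(text, chunk_size)]
    scored.sort(key=lambda sc: sc[0], reverse=True)
    selected, used = [], 0
    for s, chunk in scored:
        if s == 0:
            break  # remaining chunks share no words with the query
        if used + len(chunk) <= budget:
            selected.append(" ".join(chunk))
            used += len(chunk)
    return selected


if __name__ == "__main__":
    doc = "filler " * 1000 + "the refund policy allows returns within thirty days"
    hits = retrieve(doc, "What is the refund policy?")
    print(len(doc.split()), "words in file;", sum(len(h.split()) for h in hits), "in context")
```

A file of roughly a thousand words contributes only the one short chunk that matches the question, so context use tracks relevance rather than file size.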

Boundary behaviour (model-side)

5.0 → 5.2 shift

firmer refusal surfaces

less negotiation

clearer “cannot do” states

reduced over-alignment

Effect on observation:

boundary reactions become clearer

escalation vs repair separate cleanly

expectation mismatch surfaces immediately

Earlier versions blurred these signals.

Failure / recovery characteristics

Small context models

fail via truncation or loss of state

Large context models

fail via mis-routing or over-constraint

Agent / Pro

fail via tool gating, not reasoning collapse

Signal: most failures are envelope-driven, not cognitive.
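The small-context failure (“truncation or loss of state”) can be reproduced with a simple sliding-window history: when the conversation exceeds the budget, the oldest turns are dropped first, so an early instruction silently disappears. The word-count budget and the drop-oldest policy are assumptions for illustration; how ChatGPT actually trims or summarises history is not covered by these notes, and the function names are hypothetical.

```python
from collections import deque
from typing import Deque, List, Tuple

Turn = Tuple[str, str]  # (role, text)


def fit_history(turns: List[Turn], budget_words: int) -> List[Turn]:
    """Keep the most recent contiguous turns whose total word count fits the budget."""
    kept: Deque[Turn] = deque()
    used = 0
    for role, text in reversed(turns):
        cost = len(text.split())
        if used + cost > budget_words:
            break  # this turn and everything older falls out of the window
        kept.appendleft((role, text))
        used += cost
    return list(kept)


def lost_turns(turns: List[Turn], budget_words: int) -> List[Turn]:
    """Turns that fell out of the window, i.e. state the model no longer sees."""
    kept = fit_history(turns, budget_words)
    return turns[: len(turns) - len(kept)]


if __name__ == "__main__":
    history = [
        ("user", "Always answer in British English."),
        ("assistant", "Understood."),
        ("user", "Summarise the attached report in three bullet points."),
        ("assistant", "Here are three bullet points about the report."),
        ("user", "Now expand the second point."),
    ]
    print(lost_turns(history, budget_words=22))
```

Nothing errors here: the standing instruction is simply gone, which is why envelope failures look like drift rather than a crash.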

Practical takeaway

Model differences resolve primarily along:

how much state they can hold

how explicitly reasoning depth is exposed

which execution paths are allowed

Everything else is routing, UX, or safety layering.